- INTRODUCTION

- ASPECTS RELATED TO THE PROJECT CODE

- DEVELOPMENT/REVISION OF THE PROJECT INFRASTRUCTURE

- HOW CAN WE HELP?

INTRODUCTION

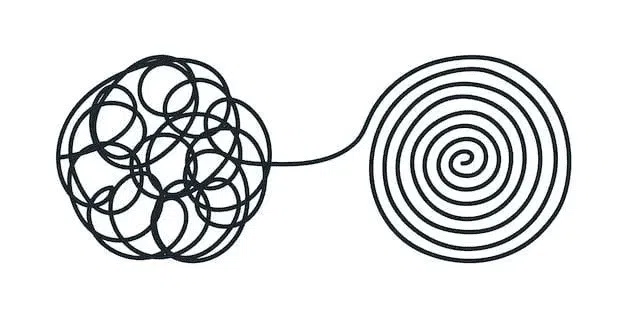

Your data science project has matured and is gaining momentum. But releases slip more and more often, the number of bugs keeps growing, and the team can no longer keep up. Let's figure out what can be done about it.

Such problems typically arise when a project moves from rapid prototyping to pre-production or production, because that move requires changing the project's organizational and technical foundations. In this article we highlight and classify the main points that, in our opinion, help restructure a project, reverse the trend from accumulating technical debt to paying it down, and make the technical side of the project predictable and manageable for management again.

ASPECTS RELATED TO THE PROJECT CODE

Data science specialists often pay little attention to code quality and performance. This is fully justified at the research stage, when building an MVP, or when one person writes the code alone. But as the project grows, the team expands, or the code is prepared for production, this “creative chaos” can cause problems.

To be fair, work on the code pays off in the medium and long term: the amount of work is usually large, and results are hard to show in a week or two. Nevertheless, if you do not start doing it in a planned way, the project may become unmanageable.

Below we look at specific areas where an experienced Python developer with data science expertise comes in handy.

- BRINGING DATA SCIENCE CODE TO PRODUCTION QUALITY

***AS A RULE, DATA SCIENCE SPECIALISTS ARE RESEARCHERS FIRST, NOT PYTHON DEVELOPERS. THEY ALSO WORK LESS OFTEN IN LARGE TEAMS, WHERE CODE QUALITY GETS MORE ATTENTION.***

- using meaningful names for variables and functions

- use of OOP (for example, inheritance from base classes)

- following the DRY principle

- exception handling

- running Python scripts with parameters from the command line

- type annotations

- documenting functions and commenting code

- checking code against generally accepted standards (PEP 8) with linters and formatters
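
Several items from this checklist can be shown together in one small script. The following is only a sketch under assumed requirements: the task (printing the mean of a file of numbers) and the names `load_values`, `EmptyInputError`, and `main` are hypothetical and chosen purely for illustration.

```python
import argparse
import logging
from pathlib import Path
from statistics import mean
from typing import Optional, Sequence

logger = logging.getLogger(__name__)


class EmptyInputError(ValueError):
    """Raised when the input file contains no numeric values."""


def load_values(path: Path) -> list:
    """Read one float per line from `path`, skipping blank lines."""
    values = []
    for line_number, line in enumerate(path.read_text().splitlines(), start=1):
        stripped = line.strip()
        if not stripped:
            continue
        try:
            values.append(float(stripped))
        except ValueError as exc:
            # Re-raise with the file position so the error is actionable.
            raise ValueError(f"{path}:{line_number}: not a number: {stripped!r}") from exc
    if not values:
        raise EmptyInputError(f"{path} contains no values")
    return values


def build_parser() -> argparse.ArgumentParser:
    """Describe the command-line interface of the script."""
    parser = argparse.ArgumentParser(description="Print the mean of a file of numbers.")
    parser.add_argument("input_path", type=Path, help="text file with one number per line")
    parser.add_argument("--precision", type=int, default=2, help="digits after the decimal point")
    return parser


def main(argv: Optional[Sequence[str]] = None) -> int:
    """Entry point: return 0 on success and 1 on a handled error."""
    args = build_parser().parse_args(argv)
    try:
        values = load_values(args.input_path)
    except (OSError, ValueError) as exc:
        logger.error("Cannot process input: %s", exc)
        return 1
    print(f"{mean(values):.{args.precision}f}")
    return 0
```

In a real script, an `if __name__ == "__main__": raise SystemExit(main())` guard at the bottom would make it runnable as `python script.py data.txt --precision 3`.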

- TESTS

***TESTS ARE A RARE GUEST IN DATA SCIENCE CODE. THEIR ROLE GROWS IN THE LONG TERM AND WHEN BUILDING COMPLEX SYSTEMS.***

- using the capabilities of test frameworks (pytest, unittest)

- using coverage to track the level of code coverage by tests
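
As an illustration of what such tests can look like, here is a minimal sketch using `unittest` from the standard library. The function under test, `min_max_scale`, is a hypothetical example of a typical preprocessing step and does not come from any particular project.

```python
import unittest


def min_max_scale(values):
    """Scale values linearly to [0, 1]; a constant series maps to all zeros."""
    if not values:
        raise ValueError("values must not be empty")
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


class MinMaxScaleTests(unittest.TestCase):
    def test_scales_to_unit_interval(self):
        self.assertEqual(min_max_scale([2.0, 4.0, 6.0]), [0.0, 0.5, 1.0])

    def test_constant_series_maps_to_zeros(self):
        self.assertEqual(min_max_scale([3.0, 3.0]), [0.0, 0.0])

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            min_max_scale([])
```

Saved in a file such as `test_scaling.py`, these tests can be run with `python -m unittest`; pytest also collects `unittest.TestCase` classes. With the coverage package installed, `coverage run -m unittest` followed by `coverage report` shows which lines the tests exercised.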

- PERFORMANCE OPTIMIZATION

***WHEN MOVING CODE TO A SERVER, PERFORMANCE ISSUES MAY ARISE THAT DID NOT EXIST DURING LOCAL DEVELOPMENT.***

- code profiling (memory profiling + cpu profiling)

- Python code optimization (best practices for NumPy, SciPy, pandas)

- optimization of AI/ML/Deep Learning components (migration to more modern libraries)

- using the Intel Math Kernel Library (MKL)
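
Profiling usually comes first, because it shows where the time and memory actually go. The sketch below uses only the standard library (`cProfile`, `pstats`, `tracemalloc`); the two functions being compared are hypothetical stand-ins for a slow and an optimized version of the same computation.

```python
import cProfile
import io
import pstats
import tracemalloc


def slow_pairwise_sum(values):
    """Sum a*b over all ordered pairs with a quadratic double loop."""
    total = 0
    for a in values:
        for b in values:
            total += a * b
    return total


def fast_pairwise_sum(values):
    """Same result in linear time: the sum over all pairs equals (sum of values) squared."""
    s = sum(values)
    return s * s


def profile_cpu(func, *args):
    """Run func under cProfile and return a text report sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.runcall(func, *args)
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(5)
    return stream.getvalue()


def peak_memory_bytes(func, *args):
    """Return the peak memory traced by tracemalloc while func runs."""
    tracemalloc.start()
    try:
        func(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak
```

Calling `print(profile_cpu(slow_pairwise_sum, list(range(2000))))` prints the functions that took the most time, which is usually enough to decide what to optimize first.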

- GPU COMPUTING

***GPUs ARE USED TO ACCELERATE COMPUTING.***

- solving related memory management problems (especially when using TensorFlow)
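
One common source of such problems is that TensorFlow by default reserves almost all GPU memory for the process. A minimal sketch of the usual mitigation, assuming TensorFlow 2.x, is shown below; the helper name `enable_gpu_memory_growth` is hypothetical, and the import is guarded so that the function is a no-op on machines without TensorFlow.

```python
def enable_gpu_memory_growth() -> int:
    """Ask TensorFlow to allocate GPU memory on demand.

    Returns the number of GPUs configured (0 if TensorFlow or GPUs are absent).
    Must be called before any GPU has been initialized, otherwise
    TensorFlow raises a RuntimeError.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return 0
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    return len(gpus)
```

Calling this once at program start lets several processes share one GPU instead of the first process claiming all of its memory.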

- CREATING A PYTHON LIBRARY FROM THE CODE

***THE SAME DATA SCIENCE CODE IS OFTEN NEEDED IN SEVERAL CUSTOMER PROJECTS, SO IT SHOULD BE PACKAGED SO THAT IT CAN SIMPLY BE IMPORTED.***

- creating library elements (pyproject.toml, requirements.txt, etc.)

- publishing and installing from a directory or installing from a code repository

- INTEGRATION WITH CLOUD SERVICES

***MANY PRODUCTION SOLUTIONS ARE BASED ON THE USE OF CLOUD SERVICES.***

- uploading/downloading files to/from AWS S3 or Google Drive directly from code
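
For AWS S3, such helpers can be sketched as follows. This is an illustration, not a definitive implementation: the function names `upload_artifact` and `download_artifact` are hypothetical, and the client is passed in as a parameter so that the code can be tested without network access. In production the client would typically be `boto3.client("s3")`, whose `upload_file` and `download_file` methods are the ones called here.

```python
from pathlib import Path


def upload_artifact(client, local_path: Path, bucket: str, key: str) -> str:
    """Upload a local file to S3 and return its s3:// URI."""
    if not local_path.is_file():
        raise FileNotFoundError(local_path)
    # boto3 signature: upload_file(Filename, Bucket, Key)
    client.upload_file(str(local_path), bucket, key)
    return f"s3://{bucket}/{key}"


def download_artifact(client, bucket: str, key: str, local_path: Path) -> Path:
    """Download an S3 object to local_path, creating parent directories as needed."""
    local_path.parent.mkdir(parents=True, exist_ok=True)
    # boto3 signature: download_file(Bucket, Key, Filename)
    client.download_file(bucket, key, str(local_path))
    return local_path
```

Passing the client explicitly also keeps credentials and region configuration out of the data science code itself.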

- INTERACTION WITH THE DEVOPS TEAM (GIT/JENKINS/DOCKER)

***WHEN INTEGRATING JENKINS/SONARQUBE, WHEN CREATING A DOCKERFILE, WHEN CONFIGURING MICROSERVICES, THE PARTICIPATION OF A DEVELOPER IS ALMOST ALWAYS NECESSARY.***

- setting up GitHub/GitLab checks

- creating Dockerfile/Jenkinsfile

- forming a versioning policy

Example 1

The project needed to minimize the time between sending the text of a message and the start of audio streaming of that message (the Text-to-Speech (TTS) process). Optimizing the Python code and parallelizing the process helped noticeably, but it was still not enough. The MKL libraries came to the rescue: without any code changes, the TTS process time decreased by a third, and the performance of individual matrix calculations improved by 60-80%.

DEVELOPMENT/REVISION OF THE PROJECT INFRASTRUCTURE

A suboptimal project infrastructure can be a serious drawback and a source of risk.

In our opinion, when auditing infrastructure, you need to pay attention to the following points:

- NEED FOR FORECASTING INFRASTRUCTURE LOAD

***SOONER OR LATER, ANY GROWING SERVICE HAS TO EVALUATE ITS TECHNICAL CAPABILITIES. THIS FIRST AFFECTS THE LARGEST SERVICES AND THOSE THAT ARE EXPENSIVE TO SCALE.***

- carrying out load testing

- using a CDN to relieve the load
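
The idea behind load testing can be sketched with the standard library alone: call the target many times concurrently and look at throughput and latency percentiles. The function `run_load_test` and its parameters are hypothetical; a real test would point `target` at an HTTP endpoint and would usually rely on a dedicated load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def run_load_test(target, total_requests: int, concurrency: int) -> dict:
    """Call target() total_requests times from `concurrency` threads and report latency stats."""
    if total_requests < 2:
        raise ValueError("total_requests must be at least 2")

    def timed_call(_):
        started = time.perf_counter()
        target()
        return time.perf_counter() - started

    wall_started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    wall_elapsed = time.perf_counter() - wall_started

    # quantiles(..., n=100) returns 99 cut points: index 49 is the median, index 94 the 95th percentile.
    cuts = quantiles(latencies, n=100)
    return {
        "requests_per_second": total_requests / wall_elapsed,
        "p50_seconds": cuts[49],
        "p95_seconds": cuts[94],
    }
```

Running the same test at increasing `concurrency` values shows the point at which latency starts to degrade, which is the number needed for capacity planning.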

- IF FORECASTED HOSTING COSTS EXCEED $100,000 PER YEAR, A DEEP ANALYSIS OF INFRASTRUCTURE USE MODES IS MANDATORY

***LIMITS OF VARIOUS KINDS MAY BE ANNOYING, BUT WHEN YOU ARE SAVING MONEY THEY PROTECT YOU FROM ACCIDENTAL OVERCONSUMPTION AND UNPLANNED SPENDING.***

- more modest configuration

- resource quotas (upper threshold for resource consumption)

- fine-tuning specific services (databases, message brokers, search engines, etc.)

- more complex solutions involving DevOps engineers (for example, scheduling data operations around the load)
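
Whether a project crosses the $100,000-per-year threshold can be estimated with simple arithmetic. The sketch below assumes a constant month-over-month growth rate, which is a simplification; the function names and the example figures are hypothetical.

```python
AUDIT_THRESHOLD_USD = 100_000  # yearly hosting cost threshold named in this article


def forecast_annual_cost(current_monthly_cost: float, monthly_growth_rate: float) -> float:
    """Sum 12 months of hosting cost, compounding growth each month (month 1 = current cost)."""
    return sum(
        current_monthly_cost * (1 + monthly_growth_rate) ** month
        for month in range(12)
    )


def needs_deep_audit(current_monthly_cost: float, monthly_growth_rate: float) -> bool:
    """True if the forecast yearly cost exceeds the audit threshold."""
    return forecast_annual_cost(current_monthly_cost, monthly_growth_rate) > AUDIT_THRESHOLD_USD
```

For example, a bill of $7,000 per month growing 5% monthly passes the threshold within the year, even though twelve flat months at $7,000 would not.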

- CORRECT APPROACH TO SCALING

***DO YOU REALLY NEED AWS, GCE, OR ANOTHER CLOUD PROVIDER RIGHT NOW? YES, IT IS FASHIONABLE, TRENDY, AND SCALABLE, BUT IT COSTS 10 TIMES AS MUCH AS SOLUTIONS BASED ON, FOR EXAMPLE, HETZNER.COM HOSTING.***

- assessing potential traffic (analyzing bursts of activity in the past and predicting them in the future)

- if your expected load is about 5,000 simultaneous users, it may be worth choosing a cheaper provider (while preparing for a quick move to a scalable solution if the project succeeds)

- INFRASTRUCTURE SECURITY AUDIT

***IT MAY SOUND FUNNY, BUT PASSWORDS LIKE “123456” OR “ADMIN” ARE STILL IN USE, SIGNIFICANTLY REDUCING SECURITY.***

- backup (frequency and rules)

- passwords and access keys

- server uptime

- physical security of servers in data centers and hosting guarantees in case of emergencies

- INFRASTRUCTURE PORTABILITY AUDIT

***YES, EVERYONE LOVES FASHIONABLE THINGS, AND PROGRAMMERS AND ANALYSTS ARE NO EXCEPTION. BUT HAVE YOU THOUGHT ABOUT WHAT WILL HAPPEN TO YOUR PROJECT, FOR EXAMPLE ONE BUILT ON BIGQUERY, IF YOU COME UNDER SANCTIONS? OR WHAT PREVENTS CLOUD PROVIDERS, GIVEN THE ELECTRONICS SHORTAGE, FROM RAISING HOSTING PRICES TENFOLD? SUCH RISKS SHOULD ALSO BE TAKEN INTO ACCOUNT.***

- an alternative to Western cloud services could be, for example, SberCloud

- CREATION OF A MONITORING SYSTEM

***AN EFFICIENT MONITORING SYSTEM ALLOWS YOU TO PROMPTLY IDENTIFY FAILURES IN SERVER OPERATION.***

- monitoring of servers and networks with Prometheus and/or Zabbix (with custom metrics where necessary, and configured with geo-distributed infrastructure in mind)

- monitoring errors in code (Sentry is great for Python projects)
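
At the code level, both kinds of monitoring come down to instrumenting functions: counting calls and errors, timing them, and forwarding exceptions to an error tracker. The sketch below is a simplified in-memory stand-in with hypothetical names (`InMemoryMetrics`, `monitored`); in a real deployment the counters would be Prometheus metrics and `report_error` could be `sentry_sdk.capture_exception`.

```python
import functools
import time
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class InMemoryMetrics:
    """Toy metrics store: named counters plus a list of durations per function."""
    counters: Counter = field(default_factory=Counter)
    durations: dict = field(default_factory=lambda: defaultdict(list))


def monitored(metrics, report_error=None):
    """Decorator that records call count, error count, and duration for a function."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            started = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception as exc:
                metrics.counters[f"{func.__name__}_errors_total"] += 1
                if report_error is not None:
                    report_error(exc)  # forward to the error tracker, then re-raise
                raise
            finally:
                metrics.counters[f"{func.__name__}_calls_total"] += 1
                metrics.durations[func.__name__].append(time.perf_counter() - started)
        return wrapper
    return decorator
```

Because the exception is re-raised after being reported, the decorator does not change the behavior of the wrapped function; it only observes it.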

Example 2

The project suddenly discovered that a significant share of its allocated funds was going to infrastructure support. To save money, reducing the development team was seriously considered. However, the project had not yet reached peak load at that point, and the solution was to switch to a cheaper cloud provider (albeit at the expense of scalability). This bought time for the full development team to find a more streamlined approach to infrastructure hosting.

ORGANIZATIONAL SOLUTIONS

This is a very important topic, but we will leave it for the next article.

HOW CAN WE HELP?

Do you want your data science project to be better? Our team is confident that we can help bring order to your project: prepare the code for production and optimize infrastructure costs. Options for cooperation include individual specialists joining the project for the long term, a one-time audit, or a whole team of specialists.

An approximate sequence of our actions, depending on the customer’s needs, may include:

- Signing the NDA

- Preliminary code audit (3 hours of developer time, free) to estimate the further time required

- Code audit (40-80 hours of a senior developer’s time)

- Infrastructure audit (20-40 hours of a System Architect’s and a DevOps engineer’s time)

- Preparation of an action plan aimed at improving the state of the project (10-20 hours)

- Implementation of the plan

You will be required to provide access to the project code and infrastructure, as well as provide load data.

Author of the article: Pavel D., Technical Director (CTO), 54origins. Have a question? Ask! If you need help organizing work on a data science project, you can get a free consultation from our CTO.

