- The user speaks a phrase.

- ASR (Automatic Speech Recognition) converts speech into text.

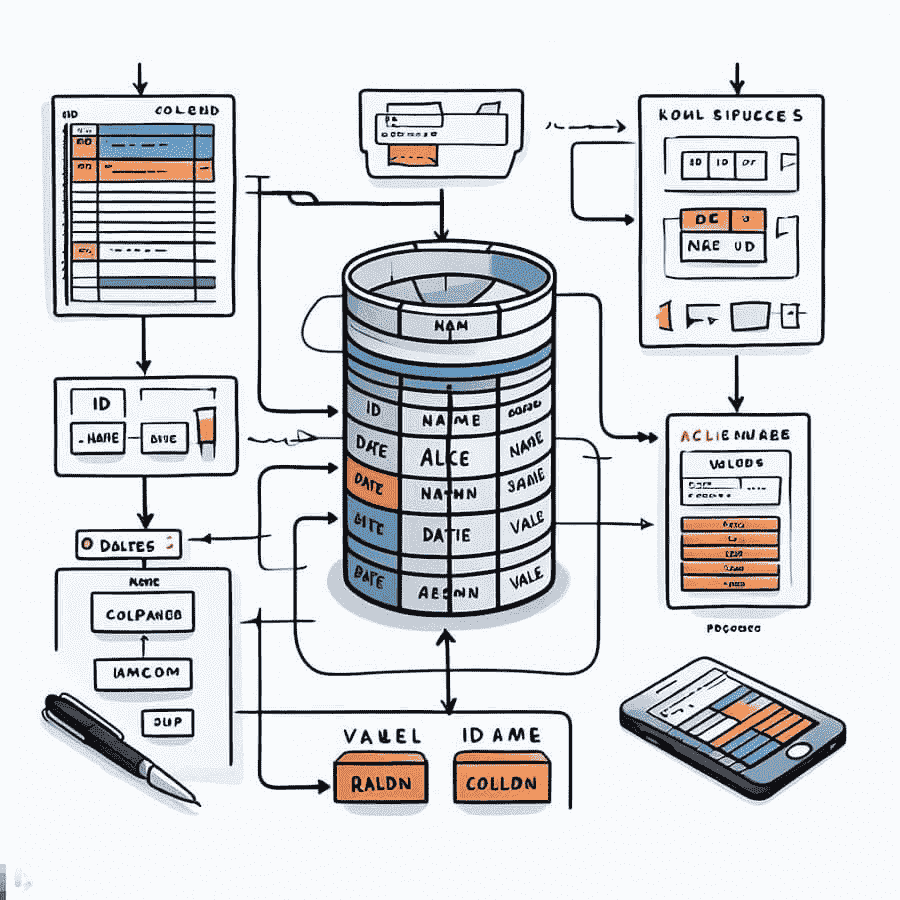

- The text is passed to the intent classifier, which must determine what action the user is asking Alice to perform.

3.1 If the intent classifier cannot confidently determine what the user wants:

- The binary classifier decides whether the utterance should be sent to the search engine. If so, the search results are returned.

- If not, the utterance is redirected to the chatter (the chit-chat module). The task of the chatter is to give an answer that is appropriate in the given dialogue context.

3.2 If the intent classifier has determined what the user wants:

- The semantic tagger extracts from the utterance the data that the given intent needs in order to generate a response.

- This data is sent to the dialogue manager, which keeps the current dialogue context, including what happened previously.

- The dialogue manager generates the response.

- TTS (Text to Speech) speaks the response.
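
The routing between the two branches (3.1 and 3.2) can be sketched roughly as follows. This is a minimal illustration, not Alice's actual code: `IntentResult`, `route_utterance`, the confidence `threshold`, and the component names returned as strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class IntentResult:
    intent: Optional[str]   # None when no intent was recognized
    confidence: float       # assumed to lie in [0, 1]


def route_utterance(
    text: str,
    classify_intent: Callable[[str], IntentResult],
    needs_search: Callable[[str], bool],
    threshold: float = 0.5,  # hypothetical cut-off for "confident"
) -> str:
    """Return the name of the component that should handle the utterance."""
    result = classify_intent(text)
    # Branch 3.2: the intent is known, so tagging and the dialogue manager follow.
    if result.intent is not None and result.confidence >= threshold:
        return "dialogue_manager"
    # Branch 3.1: the binary classifier chooses between search and the chatter.
    if needs_search(text):
        return "search"
    return "chatter"
```

The classifiers are passed in as plain callables so that the routing logic can be read and tested without any real models.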

Dialogue Manager:

- Doesn't use ML.

- The concept of form-filling: through their utterances, the user provides the information needed to fill out a virtual form. Over the course of the dialogue the form is filled in, and its data is used to generate responses.

- Event-driven engine: every time the user does something, events occur that you can subscribe to and thus construct dialogue logic.
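
A minimal sketch of how form-filling and event subscription might fit together. The `Form` class, its method names, and the event names `slot_filled` and `form_complete` are assumptions made for illustration; the source does not describe the real interface.

```python
from collections import defaultdict


class Form:
    """A virtual form whose slots are filled over several dialogue turns."""

    def __init__(self, required_slots):
        self.required = list(required_slots)
        self.slots = {}
        self.handlers = defaultdict(list)  # event name -> subscribed callbacks

    def on(self, event, handler):
        """Subscribe a callback to an event."""
        self.handlers[event].append(handler)

    def _emit(self, event, *args):
        for handler in self.handlers[event]:
            handler(*args)

    def missing(self):
        """Required slots that have not been filled yet."""
        return [name for name in self.required if name not in self.slots]

    def fill(self, name, value):
        """Store a slot value and fire the corresponding events."""
        self.slots[name] = value
        self._emit("slot_filled", name, value)
        if not self.missing():
            self._emit("form_complete", dict(self.slots))


# Usage: the dialogue logic lives in the subscribed handlers.
completed = []
form = Form(["city", "date"])
form.on("form_complete", completed.append)
form.fill("city", "Moscow")    # form still incomplete, "date" is missing
form.fill("date", "tomorrow")  # all slots filled, "form_complete" fires
```

Note that no machine learning is involved here: the behaviour is determined entirely by which slots are filled and which handlers are subscribed.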

Intent classifier:

- The model uses the nearest neighbors method.
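
To illustrate the nearest neighbors idea, here is a toy classifier. The bag-of-words `embed` function, the labeled `EXAMPLES`, and the similarity-weighted vote are assumptions chosen to keep the sketch self-contained; the source does not say how utterances are represented or how neighbors are combined.

```python
import math
from collections import Counter

# Hypothetical labeled utterances: (text, intent).
EXAMPLES = [
    ("set an alarm for seven", "alarm"),
    ("wake me up at seven", "alarm"),
    ("what is the weather today", "weather"),
    ("will it rain tomorrow", "weather"),
    ("play some music", "music"),
]


def embed(text):
    """Toy bag-of-words vector; a real system would use a learned embedding."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def classify(text, examples, k=3):
    """Return (intent, vote weight) from the k most similar examples.

    Assumes `examples` is non-empty.
    """
    query = embed(text)
    neighbours = sorted(
        ((cosine(query, embed(example)), intent) for example, intent in examples),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    votes = Counter()
    for similarity, intent in neighbours:
        votes[intent] += similarity  # closer neighbours count for more
    return votes.most_common(1)[0]
```

A low vote weight could serve as the signal that the classifier is not confident, which is the case handled by branch 3.1.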

Semantic tagger:

- LSTM is used.

- The tagger outputs not just the single most probable hypothesis but several of the most probable ones. These are re-weighted depending on the dialogue context, which helps improve quality.
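
The re-weighting of several hypotheses can be pictured as follows. This is only a sketch: the additive scoring, the `rerank` and `expected_slot_bonus` helpers, and the example scores are assumptions, since the source does not say how the context is taken into account.

```python
def rerank(hypotheses, context_bonus):
    """Sort tagging hypotheses by model score plus a context-dependent bonus.

    hypotheses: list of (slots, model_score) pairs, where slots is a dict.
    context_bonus: callable mapping slots to a float adjustment.
    """
    return sorted(
        hypotheses,
        key=lambda hyp: hyp[1] + context_bonus(hyp[0]),
        reverse=True,
    )


def expected_slot_bonus(expected_slot, bonus=0.5):
    """Reward hypotheses that fill the slot the dialogue is currently waiting for."""
    return lambda slots: bonus if expected_slot in slots else 0.0


# The assistant has just asked "Where to?", and the user answers "Moscow".
# On its own, the tagger slightly prefers reading "Moscow" as the origin.
n_best = [
    ({"from": "Moscow"}, -0.4),  # hypothetical log-probabilities
    ({"to": "Moscow"}, -0.6),
]
best_slots, _ = rerank(n_best, expected_slot_bonus("to"))[0]
```

With the context bonus applied, the `to` hypothesis moves to the top even though the tagger alone ranked it second.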

Chatter:

- DSSM-like neural network. One encoder encodes the current conversation context, the other encodes the candidate response. The network is trained so that the cosine similarity between these two vectors is as large as possible (equivalently, the cosine distance is as small as possible), which means the answer fits the context well.
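
At inference time, a two-encoder model of this kind reduces to comparing a context vector with candidate response vectors. The sketch below assumes the encoders have already produced the vectors; `cosine_similarity`, `pick_response`, and the example vectors are hypothetical and stand in for real encoder outputs.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pick_response(context_vec, candidates):
    """Return the candidate text whose vector is closest to the context vector.

    candidates: non-empty list of (text, vector) pairs.
    """
    best_text, _ = max(
        candidates, key=lambda cand: cosine_similarity(context_vec, cand[1])
    )
    return best_text


# Made-up 3-dimensional vectors in place of real encoder outputs.
context = [0.9, 0.1, 0.0]
candidates = [
    ("I'm fine, thanks!", [0.8, 0.2, 0.1]),
    ("It is raining in Moscow.", [0.0, 0.1, 0.9]),
]
reply = pick_response(context, candidates)
```

Because the two encoders are independent, candidate response vectors can be computed ahead of time, leaving only the context encoding and the similarity search for each turn.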